Getting the Hasivo F1100W-4SX-4XGT switch to work

TL;DR: The missing documentation for this otherwise fine switch

I was first exposed to Cisco network equipment in 1995, and for a long time I used their gear for my home network. Then around 2012 I switched to Juniper switches for their more sane management interface and more reasonable prices. Neither has WiFi equipment that’s really appropriate for a home setting, however, with onerous licensing terms or crackpot schizophrenic hardware like my old Cisco 877W that was one half ADSL router and one half WiFi AP (coexisting uneasily in the same physical box with separate management interfaces).

When I finally got fed up with single consumer APs, their lack of coverage and their tendency to burn out within a year due to inadequate power supplies, I bit the bullet and went with the Ubiquiti Networks UniFi solution that I use to this day. At one point I considered switching to TP-Link Omada, but procrastination paid off, and I dodged a bullet.

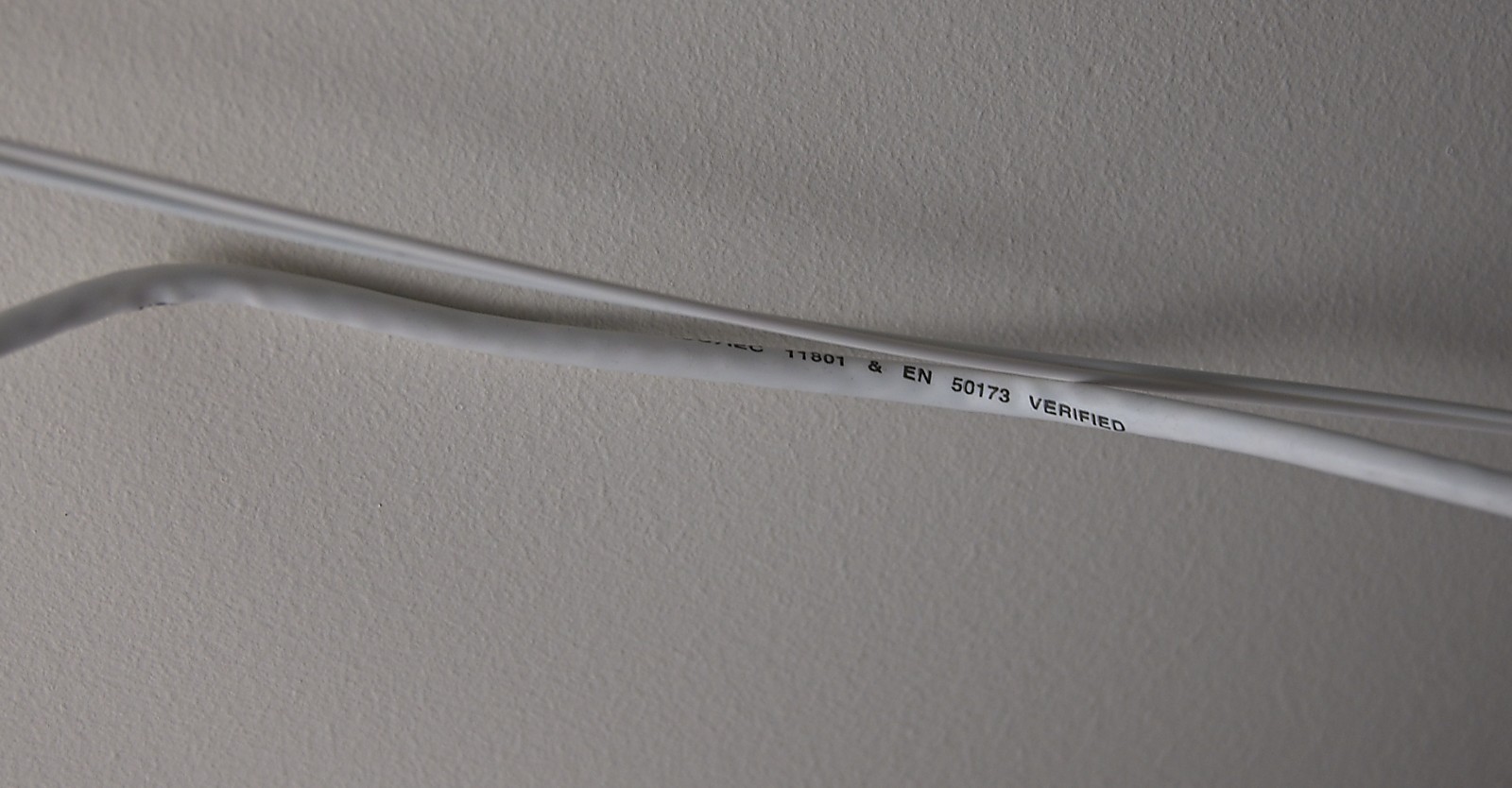

Unfortunately, Ubiquiti doesn’t have switches with both SFP+ interfaces (for fiber optic connections) and 10G-BaseT (for copper like on my Mac Studio), other than the expensive, bulky and non-fanless Pro HD 24. While you can easily get 10G-BaseT copper SFP+ modules, the power draw of a 10G-BaseT port is actually more than the nominal power capacity of an SFP+ port, and in my experience they are unreliable. For a while, I used the ZyXEL XGS1250-12, which has three 2.5G/10G copper ports and one SFP+ port, but I would prefer a switch with more SFP+ ports.

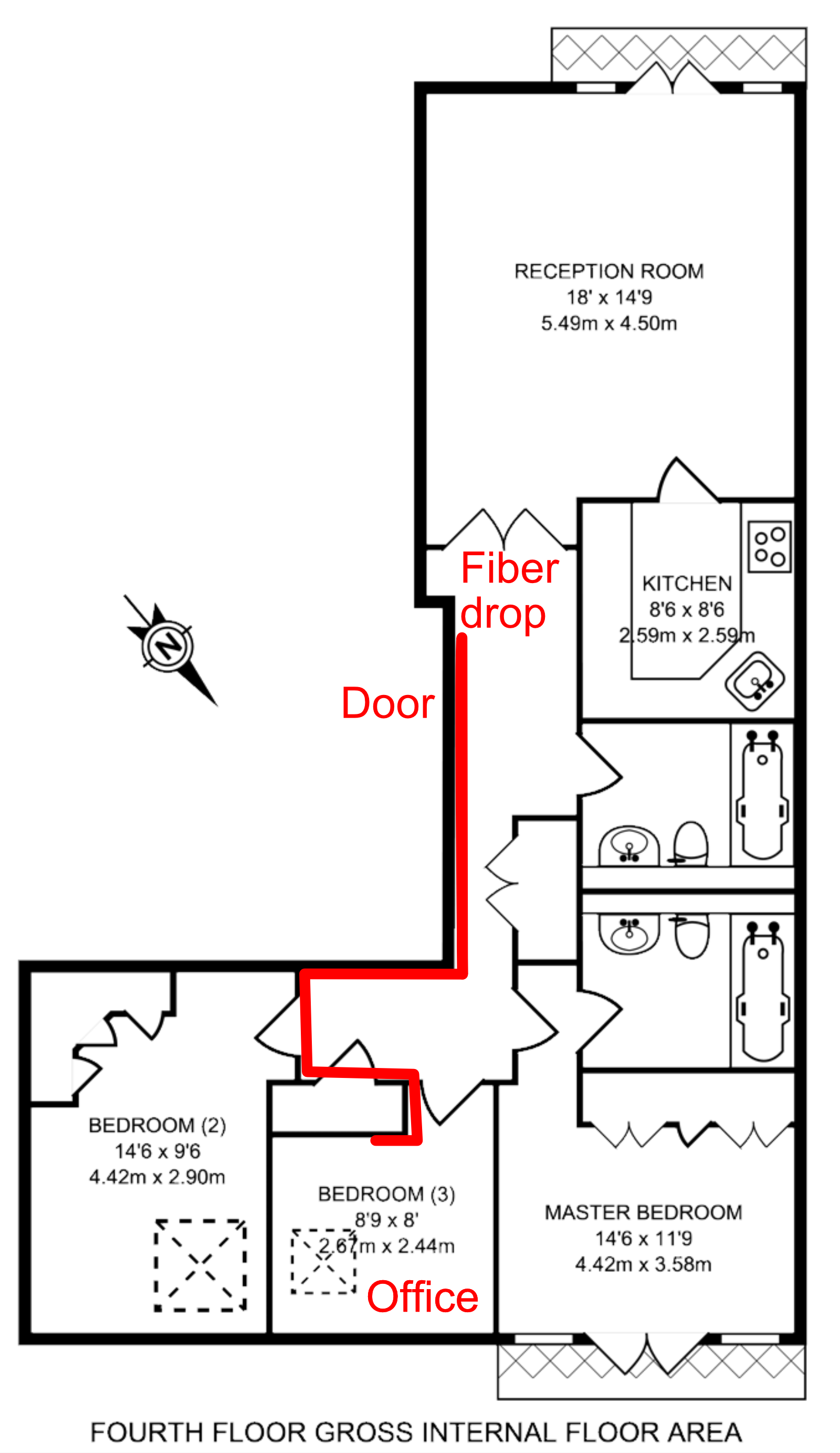

After digging through reviews and ServeTheHome, I found out about Hasivo switches, an inexpensive Chinese brand offering great value for money and interesting port configurations. Their F1100W-4SX-4XGT offers 4x SFP+ and 4x 2.5G/10G copper ports for £152.39 plus VAT, so I ordered one. When I received it, I plugged it into my home office Ubiquiti USW Pro Max 16 PoE with a Ubiquiti 10G DAC cable, plugged in my Mac, and everything just worked as it should.

The Power and RET LEDs were flashing red and green, however. Furthermore, this is supposed to be a L3-capable switch with a Web UI, but no DHCP request or IP address appeared in my UniFi console (spoiler: it’s 192.168.0.1, and DHCP is not enabled by default). The switch did not include any documentation, and there is nothing available on the Hasivo site, not even in Chinese (they have documentation links, but they point to a completely different product, and even then are largely useless).
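The spoiler above also explains why nothing showed up: with a static 192.168.0.1 and no DHCP, the switch can only be reached over IP from a machine whose own address is in the same subnet. Here is a minimal Python sketch of that check. The /24 prefix is an assumption (nothing I found states the default netmask), and `can_reach_directly` is just a name made up for illustration.

```python
import ipaddress

# Default management address mentioned above.
# ASSUMPTION: the /24 prefix is a guess; the default netmask is not documented.
SWITCH_DEFAULT = ipaddress.ip_interface("192.168.0.1/24")


def can_reach_directly(host_cidr, switch=SWITCH_DEFAULT):
    """Return True if the host's own address lies inside the switch's subnet."""
    host = ipaddress.ip_interface(host_cidr)
    return host.ip in switch.network


if __name__ == "__main__":
    # A laptop temporarily numbered in 192.168.0.0/24 shares the subnet.
    print(can_reach_directly("192.168.0.50/24"))
    # A host on a 10.0.0.0/8 home network does not.
    print(can_reach_directly("10.254.254.20/8"))
```

If your LAN is not numbered in that range, the serial console route described next avoids renumbering anything.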

Here’s how I got it to work, using information gleaned from various Internet forums:

- First, get a Cisco-style RJ45 serial console cable like the Cable Matters one, and hook it up to the Console port on the Hasivo.

- Start a terminal session, in my case on Linux:

      chown uucp:uucp /dev/ttyUSB0
      cu -l /dev/ttyUSB0 -s 38400

- The login is `admin` and the password is `admin`

- The terminal console UI is a knockoff of the Cisco IOS CLI:

  - `enable` to enter administrator mode
  - `conf t` to enter configuration mode
  - `interface vlan1` to configure the admin interface
  - `ip address 10.254.254.115/8` (or whatever you want it to be)
  - `exit` to go back to the interface level
  - `exit` to go back to the global config level
  - `show interface brief` to verify the config was applied correctly
  - `copy running-config startup-config` to make the changes persistent

- You can now point your web browser to `http://10.254.254.115/`
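If you want a different management address, the command sequence above can be generated rather than retyped. The following is a hedged Python sketch, not a tested automation tool: `mgmt_ip_commands` is a hypothetical helper name, the commands are exactly the ones listed above, and the only thing it adds is validating the address with the standard `ipaddress` module before you paste the lines into the console session.

```python
import ipaddress
from typing import List


def mgmt_ip_commands(address_cidr: str) -> List[str]:
    """Build the console command sequence listed above for a given address.

    Raises ValueError if address_cidr is not a valid address/prefix.
    """
    iface = ipaddress.ip_interface(address_cidr)
    return [
        "enable",
        "conf t",
        "interface vlan1",
        # with_prefixlen renders the address as "a.b.c.d/len"
        "ip address {}".format(iface.with_prefixlen),
        "exit",
        "exit",
        "show interface brief",
        "copy running-config startup-config",
    ]


if __name__ == "__main__":
    # Print the lines so they can be pasted into the cu session one by one.
    for line in mgmt_ip_commands("10.254.254.115/8"):
        print(line)
```

The sketch deliberately stops at printing the commands: driving the serial port from a script would need assumptions about the switch's prompts that I have not verified.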

Some people braver than I am are attempting to get OpenWrt running on the switch, but they don’t seem to have succeeded yet.