30 years later, the king of calculators rides again

In 1986, I purchased a Hewlett-Packard HP-15C scientific programmable calculator for $120 or so. That was a lot of money back then, especially for a penniless high school student, but it was worth every penny. I lived in France at the time, and HP calculators cost roughly double there, so I waited for a vacation visit to my aunt in Los Angeles to get it. HP calculators were professional tools for engineers, and you couldn’t find them at the local department store like TI trash, so I asked my aunt to mail-order it for me before my visit. I still remember the excitement of finally getting it and putting it through its paces.

The HP-15C is long discontinued, but I still keep mine as a prized heirloom, even though I have owned far more capable HPs over time (the HP-28C, HP-48SX, HP-200LX and, more recently, the HP-35S and HP-33S) and given most of them away. The HP-15C’s financial cousin, the HP-12C, is still in production today and has a tremendous cult following.

The reasons for the lasting appeal of the HP Voyager series (the HP-10C, 11C, 12C, 15C and 16C) are manifold:

- Reverse Polish Notation (RPN), HP’s distinctive way of entering calculations. For instance, to calculate the area of a circle with a 2 m radius, you would type 2 x² π × instead of π × 2 x² =, as required by the more common algebraic (AOS) notation on TI or Casio calculators. With practice, RPN is much more natural and efficient than algebraic notation. When I went from high school to college, RPN users around me went from just two (myself and a classmate who owned an HP-11C) to over 50% of my classmates.

- The ergonomics of the calculators are top-notch, from the landscape orientation to the inimitable HP keyboards with their firm and positive response.

- They offered far more functionality than the competition, such as the HP-15C’s built-in numerical integrator, equation solver, and matrix and complex-number arithmetic, or the HP-12C’s financial equation solver.
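The RPN keystroke sequence for the circle area maps directly onto operations on a small stack. Below is a minimal Python sketch of an HP-style four-level stack (X, Y, Z, T) under simplifying assumptions: the `RPNStack` class and the ASCII key names (`x2` for x², `sqrt` for √x, `ex` for eˣ, `pi` for π) are hypothetical, each number token is treated as one complete entry, and the only stack-lift rule modeled is that ENTER makes the next number overwrite X. It is an illustration, not a reproduction of HP’s firmware.

```python
import math

# One-argument keys replace X with f(X). These ASCII names are hypothetical
# stand-ins for the calculator's key labels (x², √x, eˣ, CHS).
UNARY = {
    "x2": lambda x: x * x,
    "sqrt": math.sqrt,
    "ex": math.exp,
    "CHS": lambda x: -x,
}

# Two-argument keys combine Y (first operand) with X (second operand).
BINARY = {
    "+": lambda y, x: y + x,
    "-": lambda y, x: y - x,
    "*": lambda y, x: y * x,
    "/": lambda y, x: y / x,
}

CONSTANTS = {"pi": math.pi}


class RPNStack:
    """Simplified model of an HP-style four-level RPN stack (X, Y, Z, T)."""

    def __init__(self):
        self.x = self.y = self.z = self.t = 0.0
        self.lift_enabled = True  # should the next number push the stack up?

    def _lift(self):
        # Everything moves up one level; the old T value is lost.
        self.t, self.z, self.y = self.z, self.y, self.x

    def enter_number(self, value):
        if self.lift_enabled:
            self._lift()
        self.x = value  # with lift disabled, the number simply overwrites X
        self.lift_enabled = True

    def press(self, key):
        if key == "ENTER":
            self._lift()               # copies X into Y
            self.lift_enabled = False  # the next number overwrites X
        elif key in CONSTANTS:
            self.enter_number(CONSTANTS[key])
        elif key in UNARY:
            self.x = UNARY[key](self.x)
            self.lift_enabled = True
        elif key in BINARY:
            result = BINARY[key](self.y, self.x)
            # The stack drops: Z moves into Y and T is copied into Z.
            self.x, self.y, self.z = result, self.z, self.t
            self.lift_enabled = True
        else:
            # Anything else is treated as a complete numeric entry.
            self.enter_number(float(key))

    def run(self, keystrokes):
        """Press each whitespace-separated key in turn and return X."""
        for key in keystrokes.split():
            self.press(key)
        return self.x
```

For example, `RPNStack().run("2 x2 pi *")` returns 4π ≈ 12.566, with no parentheses or = key needed. The same model evaluates the benchmark program’s integrand at x = 1.5 with `RPNStack().run("1.5 x2 CHS ex 2 ENTER pi * sqrt /")`; the ENTER there is what lets π overwrite the duplicated 2 rather than pushing it further up the stack.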

The HP-15C strikes the right balance between power and usability. The HP-48SX was far more powerful, but if you stopped using it for more than a couple of months, you would completely forget how to use it. In any case, more advanced functionality such as symbolic integration is better done on a Mac or PC using Mathematica or the like.

Unfortunately, in one of the more egregious blunders of her disastrous tenure as CEO of HP, Carly Fiorina gutted the HP calculator department, outsourcing R&D and manufacturing from Corvallis, Oregon, to China. HP has been trying to regain lost ground, but it is an uphill battle, as TI has had ample time to entrench itself.

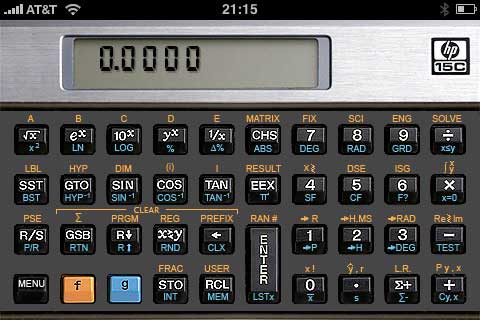

All this long exposition leads up to the news: HP has released an HP-15C emulator app for the iPhone (as well as apps for other models, like the HP-12C).

I benchmarked it by integrating the Gaussian e^(−x²)/√(2π) (f LBL A x² CHS eˣ 2 ENTER π × √x ÷ RTN) between -3 and +3. On the original HP-15C, this takes about 34 seconds. On the iPhone emulator, it is nearly instantaneous. On the Nonpareil emulator running on my octo-core Mac Pro, it takes more like a minute…
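As a desktop sanity check on the benchmark, the integral can be computed numerically. The program’s keystrokes evaluate e^(−x²)/√(2π), whose integral from -3 to +3 has the closed form √π·erf(3)/√(2π) = erf(3)/√2 ≈ 0.7071. The Python sketch below uses Romberg integration (the trapezoid rule refined by Richardson extrapolation); it is a standard textbook method chosen for illustration, not necessarily the algorithm in the HP-15C firmware, and the `romberg` and `benchmark_integrand` names are hypothetical.

```python
import math


def benchmark_integrand(x):
    # What the keystrokes compute: e^(-x^2) / sqrt(2*pi)
    return math.exp(-x * x) / math.sqrt(2 * math.pi)


def romberg(f, a, b, tol=1e-10, max_levels=20):
    """Romberg integration: trapezoid rule plus Richardson extrapolation."""
    h = b - a
    prev_row = [0.5 * h * (f(a) + f(b))]  # R(0,0): a single trapezoid
    for level in range(1, max_levels):
        h /= 2
        # Halving the step only requires evaluating the new midpoints.
        n_new = 2 ** (level - 1)
        mid_sum = sum(f(a + (2 * k + 1) * h) for k in range(n_new))
        row = [0.5 * prev_row[0] + h * mid_sum]
        # Extrapolate across the row to cancel successive h^2, h^4, ... error terms.
        for j in range(1, level + 1):
            row.append(row[j - 1] + (row[j - 1] - prev_row[j - 1]) / (4 ** j - 1))
        # Stop once successive diagonal estimates agree to within tol.
        if abs(row[-1] - prev_row[-1]) < tol:
            return row[-1]
        prev_row = row
    return prev_row[-1]


numeric = romberg(benchmark_integrand, -3, 3)
exact = math.erf(3) / math.sqrt(2)
print(numeric, exact)
```

Replacing `-x * x` with `-x * x / 2` gives the standard normal density, whose integral over ±3 is erf(3/√2) ≈ 0.9973, the familiar 99.7% of the three-sigma rule.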